Why this release matters

This isn’t a polite point-update; it’s a gleaming overhaul that touches every surface, logic path, and pixel. Build times are slashed by 50 percent, bundle size by 68 percent, and suggestions appear before you finish a keystroke. Everything you see and everything you do in v1.2.0 has been reconsidered, refactored, and re-animated.

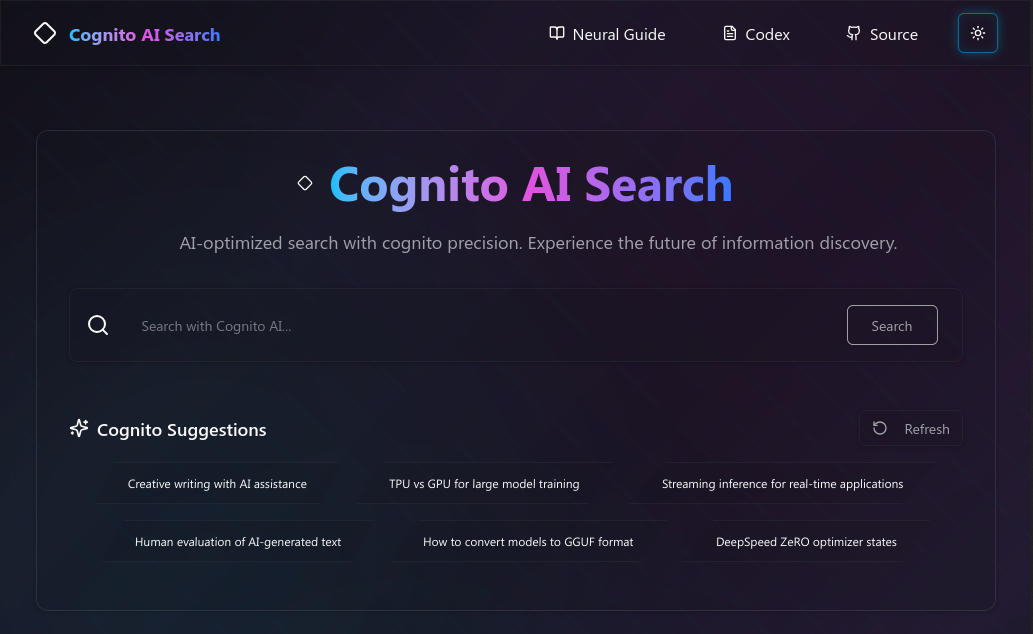

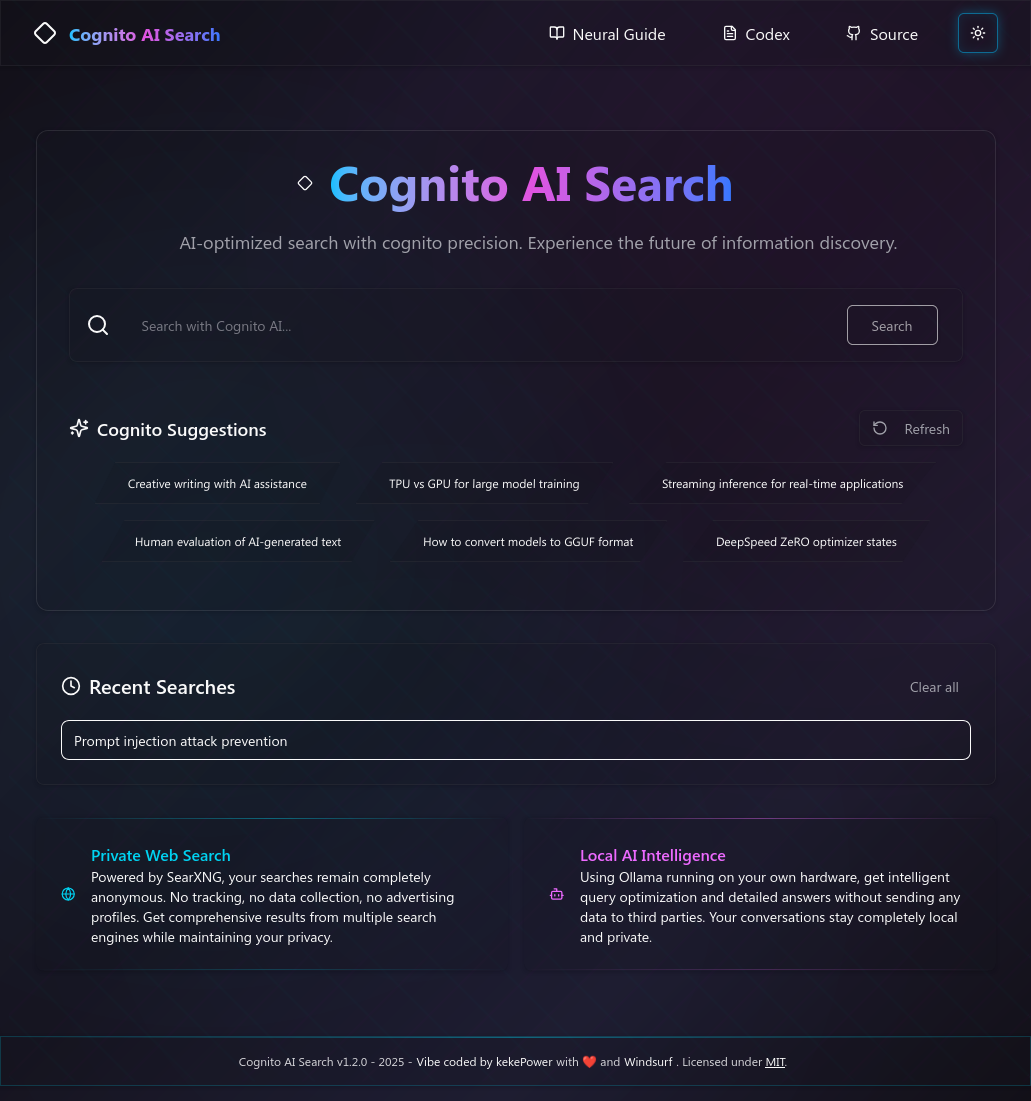

A brand-new holographic shard interface

Yes, that screenshot is the real thing, captured from today’s production build. The UI grew from a doodle of crystalline shards on a coffee receipt, and CSS clip-paths happily let me keep the idea intact. The new **Holographic Shard Design System** wraps every component in polygonal edges with subtle glassmorphism; cyan, magenta, and electric-blue accents pulse in gentle glow animations that go easy on your GPU. Whether you live in dark mode or the brand-new warm-cream light mode (still with a few quirks to iron out in upcoming point releases), the experience feels equal parts futuristic and friendly.
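For the curious, here is roughly how a clip-path can produce a shard-like card. This is a minimal sketch assuming components are styled from TypeScript; `shardClipPath` and its default cut size are hypothetical and not taken from the actual design system. It trims the top-left and bottom-right corners with a CSS `polygon()`.

```typescript
// Hypothetical helper (not from the Cognito AI Search codebase): builds a CSS
// clip-path polygon that cuts the top-left and bottom-right corners of a box.
export function shardClipPath(cutPx: number = 12): string {
  const c = `${cutPx}px`;
  const points = [
    `${c} 0`,                 // top edge starts after the top-left cut
    "100% 0",                 // top-right corner
    `100% calc(100% - ${c})`, // right edge stops before the bottom-right cut
    `calc(100% - ${c}) 100%`, // bottom edge resumes after the cut
    "0 100%",                 // bottom-left corner
    `0 ${c}`,                 // left edge ends at the top-left cut
  ];
  return `polygon(${points.join(", ")})`;
}

// Usage in a React component: <div style={{ clipPath: shardClipPath(16) }} />
```

One design consequence worth knowing: `clip-path` also clips anything drawn outside the polygon, including `box-shadow`, so glow effects generally have to live on a wrapper element or use `filter: drop-shadow()` on a parent.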

Performance leaps you can feel

Profiling became a nightly ritual. Thirty-eight unused components were trimmed, thirty-one dusty dependencies evicted, and a hundred-plus duplicate types banished. Builds that once clocked in at 4 s now finish in 2 s, bundles are 68 percent smaller, and a smart caching layer stops duplicate fetches cold. You won’t see it, but you’ll feel it in every click.
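The notes don't spell out how the caching layer works internally. One common way to stop double-fetches is to cache the in-flight promise per key, so concurrent callers share a single request, and to expire entries after a time-to-live. The sketch below assumes that approach; `createDedupCache` and its TTL parameter are hypothetical names for illustration.

```typescript
interface CacheEntry<T> {
  promise: Promise<T>;
  expiresAt: number; // epoch milliseconds
}

// Hypothetical helper: shares one in-flight request per key and reuses the
// settled result until the TTL expires. `now` is injectable for testing.
export function createDedupCache<T>(ttlMs: number, now: () => number = Date.now) {
  const entries = new Map<string, CacheEntry<T>>();

  return function cached(key: string, fetcher: () => Promise<T>): Promise<T> {
    const hit = entries.get(key);
    if (hit && hit.expiresAt > now()) {
      return hit.promise; // concurrent or repeat caller: no second fetch
    }
    const promise: Promise<T> = fetcher().catch((error: unknown) => {
      // Do not cache failures: evict this entry (if it is still the current one)
      // so the next call, e.g. a Retry click, fetches again.
      if (entries.get(key)?.promise === promise) entries.delete(key);
      throw error;
    });
    entries.set(key, { promise, expiresAt: now() + ttlMs });
    return promise;
  };
}
```

Caching the promise rather than the resolved value is what closes the race: a second caller arriving while the first request is still pending gets the same promise instead of starting another fetch.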

Architecture that loves developers

The API layer is now crystal clear: **lib/api/ollama.ts** for AI, **lib/api/searxng.ts** for search, and **lib/api/types.ts** for shared contracts. React logic moved into reusable hooks, the suggestions engine graduated into its own module, and Next.js 15 Forms now handle submissions. Contributors spend less time spelunking, more time building.
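To make that split concrete, here is a minimal sketch of what a shared contract and a search helper could look like. `SearchResult`, `buildSearxngUrl`, and `parseSearxngResults` are hypothetical names, not the project's actual exports. The sketch assumes SearXNG is served at the root of `baseUrl` with its JSON output format enabled, and uses SearXNG's `q`, `format`, and `pageno` query parameters.

```typescript
// lib/api/types.ts (hypothetical shape of a shared contract)
export interface SearchResult {
  title: string;
  url: string;
  content?: string; // SearXNG's snippet text, when present
}

// lib/api/searxng.ts (hypothetical helpers)
// Builds a JSON search URL; assumes SearXNG is served at the root of baseUrl.
export function buildSearxngUrl(baseUrl: string, query: string, page: number = 1): string {
  const url = new URL("/search", baseUrl);
  url.searchParams.set("q", query);
  url.searchParams.set("format", "json"); // JSON output must be enabled in SearXNG's settings
  url.searchParams.set("pageno", String(page));
  return url.toString();
}

// Narrows an untyped JSON payload to SearchResult[], dropping malformed items.
export function parseSearxngResults(payload: unknown): SearchResult[] {
  if (typeof payload !== "object" || payload === null) return [];
  const results = (payload as { results?: unknown }).results;
  if (!Array.isArray(results)) return [];
  return results.flatMap((item: unknown): SearchResult[] => {
    if (typeof item !== "object" || item === null) return [];
    const { title, url, content } = item as Record<string, unknown>;
    if (typeof title !== "string" || typeof url !== "string") return [];
    return typeof content === "string" ? [{ title, url, content }] : [{ title, url }];
  });
}
```

Keeping the parsing in the API module means hooks and components only ever see the typed `SearchResult` contract, never raw JSON.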

Search experience polished to perfection

The query box received rom-com-level attention. Two hundred AI-centred suggestions live in neat buckets, hover states glide, and the Sparkles icon finally looks professional. Deterministic skeleton widths eliminated the hydration-mismatch warnings, and if an AI call falters, the Retry button appears exactly where you expect it, alongside a human-friendly error message.
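Hydration mismatches appear when the server and the browser render different markup, for example when a skeleton line's width comes from `Math.random()`. Deriving the width from the line's index keeps both renders identical. A minimal sketch, with a hypothetical `skeletonWidth` helper and an arbitrary range of 60 to 95 percent:

```typescript
// Hypothetical helper: a varied-looking but deterministic width (in percent)
// for skeleton line `index`, so server and client render identical markup.
export function skeletonWidth(index: number, min: number = 60, max: number = 95): number {
  // Knuth's multiplicative hash; >>> 0 keeps the result an unsigned 32-bit integer.
  const hash = Math.imul(index + 1, 2654435761) >>> 0;
  return min + (hash % (max - min + 1));
}

// Usage in a React component (same widths on the server and on every client render):
// {[0, 1, 2].map((i) => <div key={i} className="skeleton" style={{ width: `${skeletonWidth(i)}%` }} />)}
```

The widths still look irregular, which is the point of a skeleton, but they are a pure function of the index, so React has nothing to reconcile.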

Power PDF export, now with LaTeX magic

Researchers, rejoice: PDF export renders LaTeX like a journal submission, with inline math, display equations, syntax-highlighted code blocks, and a tightened typographic hierarchy. Slide those PDFs into your next lecture or client brief with confidence.
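Before any LaTeX can be typeset, the math has to be separated from ordinary prose. Assuming the common Markdown convention of `$...$` for inline math and `$$...$$` for display equations (the notes don't name the delimiters or the renderer), a hypothetical `splitMath` tokenizer could look like this. It deliberately ignores escaped dollars and currency amounts, which a production exporter would need to handle.

```typescript
export type MathSegment =
  | { kind: "text"; value: string }
  | { kind: "inline"; value: string }
  | { kind: "display"; value: string };

// Hypothetical tokenizer: $$...$$ is display math, $...$ (single line) is inline.
// The display alternative is tried first so "$$x$$" is not split into inline pieces.
const MATH_PATTERN = /\$\$([\s\S]+?)\$\$|\$([^$\n]+?)\$/g;

export function splitMath(source: string): MathSegment[] {
  const segments: MathSegment[] = [];
  let cursor = 0;
  for (const match of source.matchAll(MATH_PATTERN)) {
    const start = match.index ?? 0;
    if (start > cursor) segments.push({ kind: "text", value: source.slice(cursor, start) });
    if (match[1] !== undefined) {
      segments.push({ kind: "display", value: match[1].trim() });
    } else {
      segments.push({ kind: "inline", value: match[2] });
    }
    cursor = start + match[0].length;
  }
  if (cursor < source.length) segments.push({ kind: "text", value: source.slice(cursor) });
  return segments;
}
```

Each segment can then be handed to the appropriate renderer: text to the Markdown pipeline, `inline` and `display` to the math typesetter in its inline or block mode.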

Security and privacy first

Zero-tracking stays non-negotiable. All inference remains on local infrastructure, inputs are validated, and error messages avoid exposing sensitive data. HTTPS is enforced, the Content Security Policy (CSP) is tightened, and Ollama calls run with `think: false`. Because inference never leaves your own machines, nothing leaks remotely. Fast and private, no trade-offs.
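A minimal sketch of a validated request to Ollama's `/api/generate` endpoint with thinking disabled. The `model`, `prompt`, `stream`, and `think` fields belong to Ollama's REST API (`think` arrived in Ollama 0.9.0); `validateQuery`, `buildGenerateRequest`, and the 500-character limit are hypothetical, and the real validation rules may differ.

```typescript
// Hypothetical limit; the project's actual validation rules are not documented here.
const MAX_QUERY_LENGTH = 500;

// Request body for Ollama's POST /api/generate endpoint.
export interface OllamaGenerateBody {
  model: string;
  prompt: string;
  stream: boolean;
  think: boolean;
}

// Rejects non-string, empty, or oversized input with user-safe messages
// that never echo the raw input back.
export function validateQuery(input: unknown): string {
  if (typeof input !== "string") throw new Error("Please enter a text query.");
  const query = input.trim();
  if (query.length === 0) throw new Error("Please enter a text query.");
  if (query.length > MAX_QUERY_LENGTH) throw new Error("That query is too long.");
  return query;
}

// Builds the URL and JSON body; the caller sends it with
// fetch(url, { method: "POST", headers: { "Content-Type": "application/json" }, body: JSON.stringify(body) }).
export function buildGenerateRequest(
  baseUrl: string,
  model: string,
  input: unknown,
): { url: string; body: OllamaGenerateBody } {
  const prompt = validateQuery(input);
  return {
    url: new URL("/api/generate", baseUrl).toString(),
    body: { model, prompt, stream: false, think: false }, // think: false skips the reasoning trace
  };
}
```

Validating before the request is built means a malformed query never reaches the model, and the thrown messages are safe to show directly next to a Retry button.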

How to upgrade

- Copy your existing `.env.local` into the new project, or just run `cp env.example .env.local` and edit it for your setup.
- Run `pnpm install` to update dependencies.
- Build once with `pnpm build`.
- Serve it with `pnpm start`.
- Make sure Ollama is at least 0.9.0.
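If you'd like the app or a deploy script to check the Ollama requirement, Ollama's `GET /api/version` endpoint returns JSON such as `{"version": "0.9.0"}`, and `ollama --version` prints the installed version on the command line. Here is a hypothetical comparison helper, assuming plain `major.minor.patch` strings with an optional `v` prefix or pre-release suffix:

```typescript
// Hypothetical helper: true when `version` >= `minimum`, comparing
// major.minor.patch numerically. A leading "v" and any pre-release or build
// suffix (e.g. "-rc1") are ignored, so "0.9.0-rc1" counts as 0.9.0.
export function isAtLeast(version: string, minimum: string): boolean {
  const parse = (v: string): number[] =>
    v.trim().replace(/^v/, "").split(/[-+]/)[0].split(".").map((part) => Number.parseInt(part, 10) || 0);
  const actual = parse(version);
  const required = parse(minimum);
  for (let i = 0; i < Math.max(actual.length, required.length); i++) {
    const a = actual[i] ?? 0; // missing parts count as 0, so "0.9" equals "0.9.0"
    const r = required[i] ?? 0;
    if (a !== r) return a > r;
  }
  return true; // equal versions satisfy the minimum
}

// Example, assuming Ollama on its default port 11434:
// const { version } = await (await fetch("http://localhost:11434/api/version")).json();
// if (!isAtLeast(version, "0.9.0")) console.warn("Ollama 0.9.0 or newer is required");
```

Comparing numerically matters here: as plain strings, `"0.10.0"` would sort before `"0.9.0"`.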

Everything else lives on the official v1.2.0 release page, including every closed Issue and edge-case migration note.

Light mode status

The warm-cream light theme is already live, but a handful of nested components still misbehave. Expect those quirks to disappear over the next point releases as we stabilise colour tokens and test wider content combos.

Call for feedback and contributions

Bugs, ideas, dream features: bring them on. Open an Issue or PR at github.com/kekePower/cognito-ai-search. The codebase is well stocked with comments, tests, and docs to make first-time contributions painless. Even a one-line fix gets CI confetti.

Signing off (and hitting deploy)

Cognito AI Search began as a weekend itch: private, AI-powered search without data trade-offs. Today, after many intense nights of coding, it feels like a new creature, shaped by community wisdom, caffeine, and a stubborn refusal to settle for anything less than delightful. Version 1.2.0 is live. Give it a spin, fork it, break it, improve it. I cannot wait to read your Issues, merge your PRs, and see where we take this next.